Completed on-line documentation.

Changed files:

- src/site/markdown/design/digital-elevation-model.md (55 additions, 0 deletions)

- src/site/markdown/design/overview.md (51 additions, 1 deletion)

- src/site/markdown/design/preliminary-design.md (122 additions, 0 deletions)

- src/site/markdown/design/technical-choices.md (213 additions, 0 deletions)

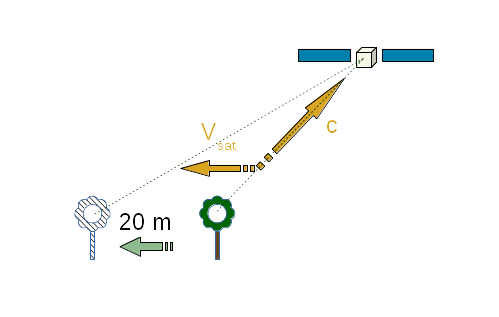

- src/site/resources/images/aberration-of-light-correction.png 0 additions, 0 deletionssrc/site/resources/images/aberration-of-light-correction.png

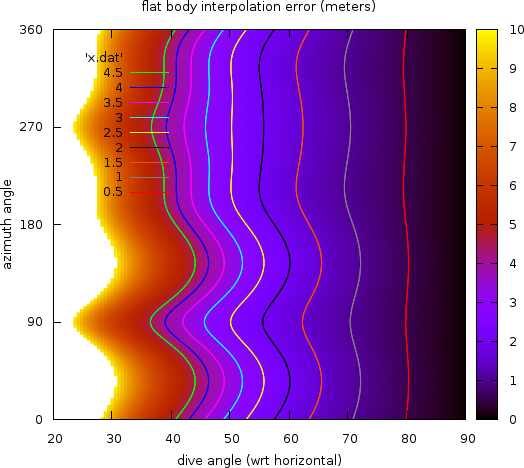

- src/site/resources/images/flat-body-interpolation-error.png 0 additions, 0 deletionssrc/site/resources/images/flat-body-interpolation-error.png

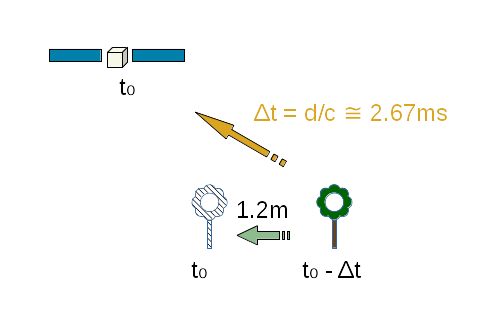

- src/site/resources/images/light-time-correction.png 0 additions, 0 deletionssrc/site/resources/images/light-time-correction.png

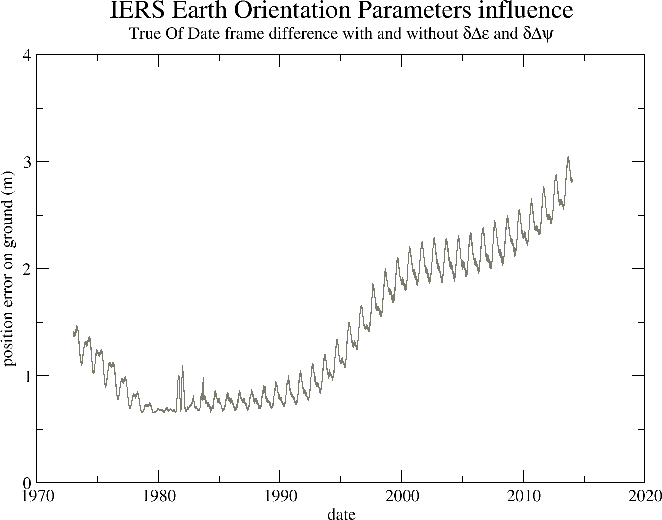

- src/site/resources/images/precession-nutation-error.png 0 additions, 0 deletionssrc/site/resources/images/precession-nutation-error.png

- src/site/site.xml 15 additions, 12 deletionssrc/site/site.xml
